In the spring of 2014, Facebook launched its Connectivity Lab. The idea was to build all sorts of new technologies that could more efficiently stretch the Internet to the rest of the world—and thus stretch Facebook to the rest of the world. But this is no simple thing. Building a flying Internet drone—a contraption that can circle the stratosphere and beam wireless signals down to Earth—is an enormous undertaking, in terms of time, technology, and money.

‘It changes, fundamentally, how our communications systems have to be developed.’ (Yael Maguire, Facebook)

Given all that effort and expense, it doesn’t really make sense for drones to beam signals into areas that don’t include real live people. You might think it’s easy to figure out where the people are. But the Earth is a mighty big place. “We realized we couldn’t answer that question—and it’s a very basic question,” says Yael Maguire, who oversees the Facebook Connectivity Lab. He describes it as a “needle-in-the-haystack problem.” Ninety-nine percent of the Earth’s surface is uninhabited.

So Facebook turned to artificial intelligence. Maguire and his team used what’s called deep learning to create a global map that shows how their new Internet tech can most efficiently reach the world’s population. “We wanted to build a map for what the best technologies would be,” Maguire says.

Sending A Signal

Drawing on services provided by the company’s AI Lab, a Facebook engineer and optical physicist named Tobias Tiecke built a system that can automatically analyze satellite images of the Earth’s surface and determine where people are actually living. This insight, Maguire explains, is now guiding how the company builds those flying drones. In fact, he says, it has shown that the company’s original approach to drone-powered Internet access was all wrong.
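The article does not describe how the satellite imagery was fed to the network. One plausible arrangement, shown here purely as a sketch under that assumption, is to cut each large image into fixed-size tiles, ask a yes/no classifier about each tile, and collect the answers into a grid. The tile size, the `Image` type, and the functions `split_into_tiles` and `settlement_map` are hypothetical illustrations, and the brightness rule in the demo merely stands in for a trained network.

```python
from typing import Callable, List

# Hypothetical representation: a grayscale image as rows of pixel values.
Image = List[List[float]]


def split_into_tiles(image: Image, tile: int) -> List[List[Image]]:
    """Cut an image into non-overlapping tile x tile patches.

    Rows or columns at the edge that don't fill a whole tile are dropped.
    """
    rows, cols = len(image), len(image[0])
    grid: List[List[Image]] = []
    for r in range(0, rows - tile + 1, tile):
        row_of_tiles = []
        for c in range(0, cols - tile + 1, tile):
            patch = [line[c:c + tile] for line in image[r:r + tile]]
            row_of_tiles.append(patch)
        grid.append(row_of_tiles)
    return grid


def settlement_map(image: Image, tile: int, classify: Callable[[Image], bool]) -> List[List[int]]:
    """Ask a yes/no classifier about every tile; return a 0/1 map with one entry per tile."""
    return [[1 if classify(patch) else 0 for patch in row]
            for row in split_into_tiles(image, tile)]


# Toy usage: a 4x4 "image" whose top-left corner is bright, and a stand-in
# classifier that answers "yes" when a tile's mean brightness exceeds 0.5.
toy_image = [[1, 1, 0, 0],
             [1, 1, 0, 0],
             [0, 0, 0, 0],
             [0, 0, 0, 0]]
toy_map = settlement_map(toy_image, 2,
                         lambda p: sum(map(sum, p)) / (len(p) * len(p[0])) > 0.5)
```

Here `toy_map` marks only the top-left tile as showing a human artifact. In a real pipeline the lambda would be replaced by the trained network, and the tile grid would be georeferenced so each entry maps back to a patch of ground.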

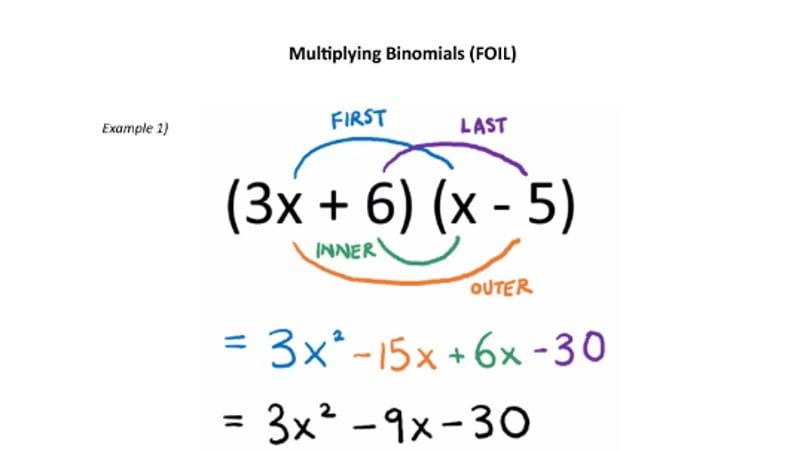

Deep learning relies on neural networks—networks of hardware and software that approximate the web of neurons in the human brain. If you feed enough photos of a goat into a neural network, it can learn to identify a goat. If you feed enough spoken words into a neural net, it can learn to recognize the commands you speak into your smartphone. In much the same way, it can analyze satellite photos and learn to recognize where people are living.
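To make the neuron analogy concrete, here is a minimal sketch in plain Python, not Facebook’s code: each artificial “neuron” multiplies its inputs by weights, adds a bias, and squashes the result through a sigmoid, and a network is just layers of such units feeding one another. The weights below are arbitrary made-up numbers. Training, which is what “feeding in goat photos” amounts to, would consist of adjusting them; this sketch shows only the forward computation.

```python
import math
from typing import Sequence, Tuple


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs plus a bias, passed through a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def tiny_network(inputs: Sequence[float],
                 hidden_layer: Sequence[Tuple[Sequence[float], float]],
                 output_unit: Tuple[Sequence[float], float]) -> float:
    """Two layers: hidden neurons read the raw inputs; the output neuron reads the hidden activations."""
    hidden = [neuron(inputs, w, b) for w, b in hidden_layer]
    w_out, b_out = output_unit
    return neuron(hidden, w_out, b_out)


# Made-up weights, purely to show data flowing through the layers.
out = tiny_network([0.2, 0.9],
                   hidden_layer=[([1.0, -1.0], 0.0), ([0.5, 0.5], -0.2)],
                   output_unit=([2.0, -1.0], 0.1))
```

The final output is a number between 0 and 1, which a classifier can read as its confidence that the input belongs to the category it was trained on.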

For something like this to work, you need labeled data. In other words, humans must identify some good examples before they’re fed into a neural net. They must label a sample set of goat photos, for instance, as goat photos. The same goes for Facebook’s new project, but with a twist. The human trainers didn’t bother to label specific evidence of civilization. They didn’t label houses or cars or roads or farmland as a way of training the neural net. Given a photo, the humans simply noted whether or not it showed any sign that people were living there. “We just asked: ‘Is there a human artifact in this image or not?’” says Maguire. “Binary question. Yes or no.”
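The supervision described here boils down to one bit per image. A minimal sketch of learning from such labels, assuming each image has already been reduced to two made-up numeric features (real deep networks learn their features from raw pixels), is logistic regression trained by gradient descent on binary cross-entropy. The feature values, learning rate, and epoch count are hypothetical, and this single-unit model is far simpler than the deep network the article describes.

```python
import math
from typing import List, Tuple

# Hypothetical toy data: each "image" reduced to two invented features,
# labeled 1 if a human answered "yes, there is a human artifact", else 0.
examples: List[Tuple[List[float], int]] = [
    ([0.9, 0.1], 1), ([0.8, 0.3], 1), ([0.7, 0.2], 1),
    ([0.1, 0.9], 0), ([0.2, 0.7], 0), ([0.3, 0.8], 0),
]


def predict(w: List[float], b: float, x: List[float]) -> float:
    """Estimated probability that x shows a human artifact."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def train(data: List[Tuple[List[float], int]], lr: float = 0.5, epochs: int = 500):
    """Stochastic gradient descent on binary cross-entropy; the only supervision is a 0/1 label."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, b, x) - y  # gradient of BCE w.r.t. the pre-sigmoid score
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


w, b = train(examples)
# Threshold the probabilities at 0.5 to get yes/no answers.
labels = [1 if predict(w, b, x) >= 0.5 else 0 for x, _ in examples]
```

Note that nothing in the training loop knows about houses, cars, or roads: the model only ever sees a feature vector and a yes/no answer, which mirrors the binary labeling Maguire describes.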

Given this basic information for a relatively small number of photos (about 8,000 overhead images of India, each labeled as either containing a human artifact or not), the neural net could then identify human settlements in photos of about twenty other countries. In total, the system analyzed 15.6 billion images representing 21.6 million square kilometers of Earth. “Just based on that information, the algorithm can then go out and find all sorts of human artifacts,” Maguire says. The error rate, he says, is less than 10 percent.
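Two quick calculations help read these figures. Dividing the quoted area by the quoted image count gives the average ground area per image, assuming (this is not stated in the article) that equal-sized images tile the area evenly without overlap. And an error rate is conventionally the share of labeled test images the classifier gets wrong; the article does not say how Facebook measured its figure, so the counts in the usage line and the helper `error_rate` are invented for illustration.

```python
import math

# Figures quoted in the article.
IMAGES_ANALYZED = 15.6e9
AREA_KM2 = 21.6e6
M2_PER_KM2 = 1_000_000

# Assumption (not from the article): equal-sized images tiling the area with no overlap.
area_per_image_m2 = AREA_KM2 * M2_PER_KM2 / IMAGES_ANALYZED  # about 1,385 m²
side_m = math.sqrt(area_per_image_m2)                        # about 37 m on a side


def error_rate(tp: int, fp: int, tn: int, fn: int) -> float:
    """Fraction of labeled test images classified wrongly: (false positives + false negatives) / total."""
    total = tp + fp + tn + fn
    return (fp + fn) / total


# Invented counts: 80 mistakes out of 1,000 held-out images, i.e. 8 percent.
example_rate = error_rate(tp=450, fp=30, tn=470, fn=50)
```

Under the even-tiling assumption, each image would cover roughly a 37-meter square of ground.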


The simplicity of this approach may seem surprising. It was certainly surprising to Maguire. But deep neural nets work in sometimes surprising ways, and the aim is to build a classifier (a way of identifying photos or spoken words or other data) that’s as simple as possible. “When you’re building a classifier like Facebook’s, the more categories you ask the [neural] net to use, the harder the problem becomes, both in terms of computation and neural net tuning,” says Chris Nicholson, the CEO and founder of a deep learning startup called Skymind. “So for the sake of efficiency, you want to draw a line on how sophisticated of a classifier you need. Facebook chose to do something really simple, but if that fulfills their goal, then great.”
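Nicholson’s point about categories can be made concrete for one slice of the cost. In many networks the last layer is fully connected: with a `d`-dimensional feature vector, a yes/no classifier can use a single sigmoid output (d + 1 parameters), while a K-way softmax classifier needs K outputs ((d + 1) × K parameters). This sketch counts only that final layer, the feature size of 512 is an assumed example, and it says nothing about the tuning difficulty Nicholson also mentions.

```python
def output_layer_params(feature_dim: int, num_classes: int) -> int:
    """Count weights plus biases in a final fully connected layer.

    Two classes can share one sigmoid output unit; K > 2 classes get one softmax unit each.
    """
    if num_classes < 2:
        raise ValueError("a classifier needs at least two classes")
    outputs = 1 if num_classes == 2 else num_classes
    return (feature_dim + 1) * outputs


FEATURES = 512  # assumed size of the learned feature vector, for illustration only
for k in (2, 10, 100):
    print(k, output_layer_params(FEATURES, k))  # 2 513, then 10 5130, then 100 51300
```

The growth is linear in the number of categories for this layer alone; the binary design keeps it at its minimum.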

In the end, they can build a vast map of human artifacts with a resolution of about 5 meters. In other words, they pretty much know whether there’s evidence of human life in every 5-by-5-meter square across 20 countries. Then, by combining this information with census data, they can map the population density in those countries. And that’s a powerful thing.
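Two pieces of arithmetic sit behind this paragraph. A 5-meter resolution means each map cell covers 5 × 5 = 25 m², so a square kilometer holds 40,000 cells. The article does not say how Facebook merged its map with census figures; one simple, well-known approach (a form of dasymetric mapping) is to spread each census area’s head count evenly over the cells flagged as settled. The sketch below assumes that approach; `allocate_population` and `density_per_km2` are hypothetical helpers, and the toy grid is invented.

```python
from typing import List

CELL_SIDE_M = 5                            # map resolution quoted in the article
CELL_AREA_M2 = CELL_SIDE_M * CELL_SIDE_M   # 25 m² per cell
CELLS_PER_KM2 = 1_000_000 // CELL_AREA_M2  # 40,000 cells in one square kilometer


def allocate_population(settled: List[List[int]], census_total: float) -> List[List[float]]:
    """Spread one census area's total evenly over its settled cells (1s); unsettled cells (0s) get nobody.

    If no cell is flagged as settled, every cell gets 0 and the census count is left unallocated.
    """
    n_settled = sum(sum(row) for row in settled)
    if n_settled == 0:
        return [[0.0 for _ in row] for row in settled]
    per_cell = census_total / n_settled
    return [[per_cell if cell else 0.0 for cell in row] for row in settled]


def density_per_km2(people_in_cell: float) -> float:
    """Convert a per-cell head count into people per square kilometer."""
    return people_in_cell * CELLS_PER_KM2


# Toy usage: a 2x2 patch of the map with three settled cells and a census count of 3.
toy_people = allocate_population([[1, 0], [1, 1]], census_total=3)
```

The uniform split is the crudest choice; a refinement might weight cells by how strongly the classifier detected human artifacts, but that too would be an assumption beyond what the article reports.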

[Source: Wired]