THE HYPE OVER MOOCs peaked in 2012. Salman Khan, an investment analyst who had begun teaching bite-sized lessons to his cousin in New Orleans over the internet and turned that activity into a wildly popular educational resource called the Khan Academy, was splashed on the cover of Forbes. Sebastian Thrun, the founder of another MOOC called Udacity, predicted in an interview in Wired magazine that within 50 years the number of universities would collapse to just ten worldwide. The New York Times declared it the year of the MOOC.

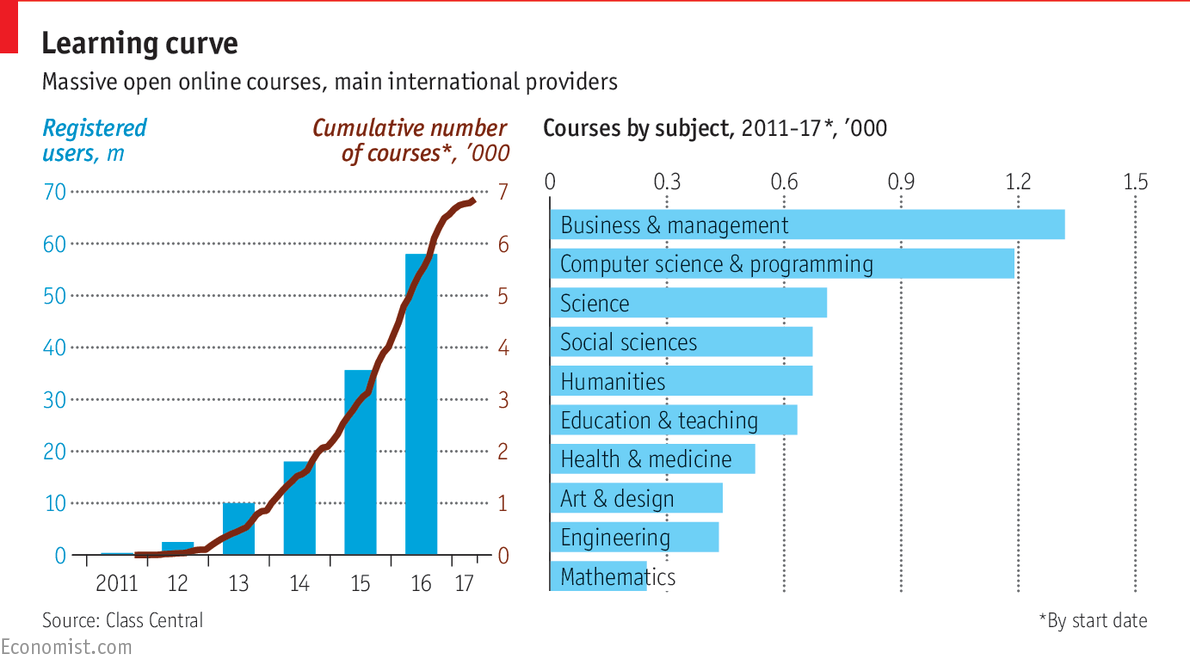

The sheer numbers of people flocking to some of the initial courses seemed to suggest that an entirely new model of open-access, free university education was within reach. Now MOOC sceptics are more numerous than believers. Although lots of people still sign up, drop-out rates are sky-high.

Nonetheless, the MOOCs are on to something. Education, like health care, is a complex and fragmented industry, which makes it hard to gain scale. Despite those drop-out rates, the MOOCs have shown it can be done quickly and comparatively cheaply. The Khan Academy has 14m-15m users who conduct at least one learning activity with it each month; Coursera has 22m registered learners. Those numbers are only going to grow. FutureLearn, a MOOC owned by Britain’s Open University, has big plans. Oxford University announced in November that it would be producing its first MOOC on the edX platform.

In their search for a business model, some platforms are now focusing much more squarely on employment (though others, like the Khan Academy, are not for profit). Udacity has launched a series of nanodegrees in tech-focused courses that range from the basic to the cutting-edge. It has done so, moreover, in partnership with employers. A course on Android was developed with Google; a nanodegree in self-driving cars uses instructors from Mercedes-Benz, Nvidia and others. Students pay $199-299 a month for as long as it takes them to finish the course (typically six to nine months) and get a 50% rebate if they complete it within a year. Udacity also offers a souped-up version of its nanodegree for an extra $100 a month, along with a money-back guarantee if graduates do not find a job within six months.
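To put those prices in perspective, here is a rough worked range. It assumes the 50% rebate is half of the tuition actually paid, and that the student finishes within the typical six to nine months and so inside the one-year window. It ignores the extra $100 a month for the souped-up version.

$$
\begin{aligned}
\text{low end: } & 6 \times \$199 = \$1{,}194, && \text{after rebate: } 0.5 \times \$1{,}194 = \$597 \\
\text{high end: } & 9 \times \$299 = \$2{,}691, && \text{after rebate: } 0.5 \times \$2{,}691 = \$1{,}345.50
\end{aligned}
$$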

Coursera’s content comes largely from universities, not specialist instructors; its range is much broader; and it is offering full degrees (one in computer science, the other an MBA) as well as shorter courses. But it, too, has shifted its emphasis to employability. Its boss, Rick Levin, a former president of Yale University, cites research showing that half of its learners took courses in order to advance their careers. Although its materials are available without charge, learners pay for assessment and accreditation at the end of the course ($300-400 for a four-course sequence that Coursera calls a “specialisation”). It has found that when money is changing hands, completion rates rise from 10% to 60%. It is increasingly working with companies, too. Firms can now integrate Coursera into their own learning portals, track employees’ participation and provide their desired menu of courses.

These are still early days. Coursera does not give out figures on its paying learners; Udacity says it has 13,000 people doing its nanodegrees. Whatever the arithmetic, the reinvented MOOCs matter because they are solving two problems they share with every provider of later-life education.

The first of these is the cost of learning, not just in money but also in time. Formal education rests on the idea of qualifications that take a set period to complete. In America the entrenched notion of “seat time”, the amount of time that students spend with school teachers or university professors, dates back to Andrew Carnegie. It was originally intended as an eligibility requirement for teachers to draw a pension from the industrialist’s nascent retirement scheme for college faculty. Students in their early 20s can more easily afford a lengthy time commitment because they are less likely to have other responsibilities. Millions of people do manage part-time or distance learning in later life: one-third of all working students currently enrolled in America are 30-54 years old, according to the Georgetown University Center on Education and the Workforce. But balancing learning, working and family life can create enormous pressure.

Moreover, the world of work increasingly demands a quick response from the education system to provide people with the desired qualifications. To take one example from Burning Glass, in 2014 just under 50,000 American job-vacancy ads asked for a CISSP cyber-security certificate. Since only 65,000 people in America hold such a certificate and it takes five years of experience to earn one, that requirement will be hard to meet. Less demanding professions also put up huge barriers to entry. If you want to become a licensed cosmetologist in New Hampshire, you will need to have racked up 1,500 hours of training.

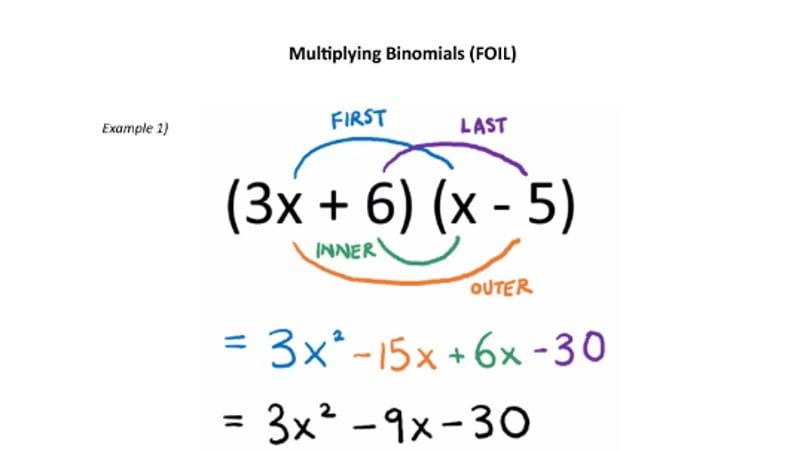

In response, the MOOCs have tried to make their content as digestible and flexible as possible. Degrees are broken into modules; modules into courses; courses into short segments. The MOOCs test for optimal length to ensure people complete the course; six minutes is thought to be the sweet spot for online video and four weeks for a course.

Scott DeRue, the dean of the Ross School of Business at the University of Michigan, says the unbundling of educational content into smaller components reminds him of another industry: music. Songs used to be bundled into albums before being disaggregated by iTunes and streaming services such as Spotify. In Mr DeRue’s analogy, the degree is the album, the course content that is freely available on MOOCs is the free streaming radio service, and a “microcredential” like the nanodegree or the specialisation is paid-for iTunes.

How should universities respond to that kind of disruption? For his answer, Mr DeRue again draws on the lessons of the music industry. Faced with the disruption caused by the internet, it turned to live concerts, which provided a premium experience that cannot be replicated online. The on-campus degree also needs to mark itself out as a premium experience, he says.

Another answer is for universities to make their own products more accessible by doing more teaching online. This is beginning to happen. When Georgia Tech decided to offer an online version of its masters in computer science at low cost, many were shocked: it seemed to risk cannibalising its campus degree. But according to Joshua Goodman of Harvard University, who has studied the programme, the decision was proved right. The campus degree continued to recruit students in their early 20s whereas the online degree attracted people with a median age of 34 who did not want to leave their jobs. Mr Goodman reckons this one programme could boost the numbers of computer-science masters produced in America each year by 7-8%. Chip Paucek, the boss of 2U, a firm that creates online degree programmes for conventional universities, reports that additional marketing efforts to lure online students also boost on-campus enrolments.

Educational Lego

Universities can become more modular, too. EdX has a micromasters in supply-chain management that can either be taken on its own or count towards a full masters at MIT. The University of Wisconsin-Extension has set up a site called the University Learning Store, which offers slivers of online content on practical subjects such as project management and business writing. Enthusiasts talk of a world of “stackable credentials” in which qualifications can be fitted together like bits of Lego.

Just how far and fast universities will go in this direction is unclear, however. Degrees are still highly regarded, and increased emphasis on critical thinking and social skills raises their value in many ways. “The model of campuses, tenured faculty and so on does not work that well for short courses,” adds Jake Schwartz, General Assembly’s boss. “The economics of covering fixed costs forces them to go longer.”

Academic institutions also struggle to deliver really fast-moving content. Pluralsight uses a model similar to that of book publishing by employing a network of 1,000 experts to produce and refresh its library of videos on IT and creative skills. These experts get royalties based on how often their content is viewed; Pluralsight’s highest-earning author pulled in $2m last year, according to Aaron Skonnard, the firm’s boss. Such rewards provide an incentive for authors to keep updating their content. University faculty have other priorities.

Besides cost, the second problem for MOOCs to solve is credentials. Close colleagues know each other’s abilities, but modern labour markets do not work on the basis of such relationships. They need widely understood signals of experience and expertise, like a university degree or a baccalaureate, however imperfect they may be. In their own fields, vocational qualifications do the same job. The MOOCs’ answer is to offer microcredentials like nanodegrees and specialisations.

But employers still need to be confident that the skills these credentials vouchsafe are for real. LinkedIn’s “endorsements” feature, for example, was routinely used by members to hand out compliments to people they did not know for skills they did not possess, in the hope of a reciprocal recommendation. In 2016 the firm tightened things up, but getting the balance right is hard. Credentials require just the right amount of friction: enough to be trusted, not so much as to block career transitions.

Universities have no trouble winning trust: many of them can call on centuries of experience and name recognition. Coursera relies on universities and business schools for most of its content; their names sit proudly on the certificates that the firm issues. Some employers, too, may have enough kudos to play a role in authenticating credentials. The involvement of Google in the Android nanodegree has helped persuade Flipkart, an Indian e-commerce platform, to hire Udacity graduates sight unseen.

Wherever the content comes from, students’ work usually needs to be validated properly for a credential to be trusted. When student numbers are limited, the marking can be done by the teacher. But in the world of MOOCs those numbers can spiral, making it impractical for the instructors to do all the assessments. Automation can help, but does not work for complex assignments and subjects. Udacity gets its students to submit their coding projects via GitHub, a hosting site, to a network of machine-learning graduates who give feedback within hours.

Even if these problems can be overcome, however, there is something faintly regressive about the world of microcredentials. Like a university degree, it still involves a stamp of approval from a recognised provider after a proprietary process. Yet lots of learning happens in informal and experiential settings, and lots of workplace skills cannot be acquired in a course.

Gold stars for good behaviour

One way of dealing with that is to divide the currency of knowledge into smaller denominations by issuing “digital badges” to recognise less formal achievements. RMIT University, Australia’s largest tertiary-education institution, is working with Credly, a credentialling platform, to issue badges for the skills that are not tested in exams but that firms nevertheless value. Belinda Tynan, RMIT’s vice-president, cites as an example a project in which engineering students built an electric car, entered it into races and won sponsors.

The trouble with digital badges is that they tend to proliferate. Illinois State University alone created 110 badges when it launched a programme with Credly in 2016. Add in MOOC certificates, LinkedIn Learning courses, competency-based education, General Assembly and the like, and the idea of creating new currencies of knowledge starts to look more like a recipe for hyperinflation.

David Blake, the founder of Degreed, a startup, aspires to resolve that problem by acting as the central bank of credentials. He wants to issue a standardised assessment of skill levels, irrespective of how people got there. The plan is to create a network of subject-matter experts to assess employees’ skills (copy-editing, say, or credit analysis), and a standardised grading language that means the same thing to everyone, everywhere.

Pluralsight is heading in a similar direction in its field. A diagnostic tool uses a technique called item response theory to work out users’ skill levels in areas such as coding, giving them a rating. The system helps determine what individuals should learn next, but also gives companies a standardised way to evaluate people’s skills.
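Item response theory covers a family of statistical models, and the article does not say which one Pluralsight uses. As a rough illustration only, here is a minimal Python sketch of one common variant, the two-parameter logistic (2PL) model. In it, each question has a difficulty `b` and a discrimination `a`, and the chance of a correct answer rises with the test-taker's ability `theta`. The item parameters and function names below are hypothetical. Real systems calibrate item parameters from large pools of responses and usually pick questions adaptively.

```python
import math
from typing import List, Tuple

def p_correct(theta: float, a: float, b: float) -> float:
    """2PL item response function: probability that a person of ability
    theta answers an item with discrimination a and difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def log_likelihood(theta: float, items: List[Tuple[float, float]],
                   responses: List[int]) -> float:
    """Sum of log-probabilities of the observed answers (1 = right, 0 = wrong)."""
    total = 0.0
    for (a, b), r in zip(items, responses):
        p = p_correct(theta, a, b)
        total += math.log(p) if r else math.log(1.0 - p)
    return total

def estimate_ability(items: List[Tuple[float, float]], responses: List[int],
                     lo: float = -4.0, hi: float = 4.0, steps: int = 801) -> float:
    """Maximum-likelihood ability estimate by grid search over [lo, hi].
    The bounded grid keeps the estimate finite even when every answer is
    right (or wrong), where the unconstrained maximum would run off to infinity."""
    best_theta, best_ll = lo, -math.inf
    for i in range(steps):
        theta = lo + (hi - lo) * i / (steps - 1)
        ll = log_likelihood(theta, items, responses)
        if ll > best_ll:
            best_theta, best_ll = theta, ll
    return best_theta

# Hypothetical item bank: (discrimination a, difficulty b) for five coding questions,
# ordered from easiest to hardest.
ITEMS = [(1.0, -1.5), (1.2, -0.5), (0.8, 0.0), (1.5, 0.7), (1.1, 1.8)]

strong = estimate_ability(ITEMS, [1, 1, 1, 1, 0])  # misses only the hardest item
weak = estimate_ability(ITEMS, [1, 0, 0, 0, 0])    # gets only the easiest item
print(f"strong: {strong:+.2f}, weak: {weak:+.2f}")
```

The resulting rating depends on which questions were answered correctly and how discriminating they are, not just on the raw score. So under the 2PL model, two people with the same number of right answers can receive different ratings. The grid search is used here for clarity; production systems more often use Newton-Raphson or Bayesian estimators.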

A system of standardised skills measures has its own problems, however. Using experts to grade ability raises recursive questions about the credentials of those experts. And it is hard for item response theory to assess subjective skills, such as an ability to construct an argument. Specific, measurable skills in areas such as IT are more amenable to this approach.

So amenable, indeed, that they can be tested directly. As an adolescent in Armenia, Tigran Sloyan used to compete in mathematical Olympiads. That experience helped him win a place at MIT and also inspired him to found a startup called CodeFights in San Francisco. The site offers free gamified challenges to 500,000 users as a way of helping programmers learn. When they know enough, they are funnelled towards employers, which pay the firm 15% of a successful candidate’s starting salary. Sqore, a startup in Stockholm, also uses competitions to screen job applicants on behalf of its clients.

However it is done, the credentialling problem has to be solved. People are much more likely to invest in training if it confers a qualification that others will recognise. But they also need to know which skills are useful in the first place.

[“source-ndtv”]